When Xiaoyin Qu was growing up in China, she was obsessed with learning how to build paper airplanes that could do flips in the air. Her parents, though, didn’t have the aerodynamic expertise to support her newfound passion, and her teachers were too overwhelmed to give her dedicated attention.

“That’s why I wanted to build an AI that can help provide every single kid with their own dedicated coach and playmate that can chat with them and help the kids learn,” Qu told TechCrunch.

Qu is the founder of Heeyo, a startup that offers children between the ages of three and 11 an AI chatbot and over 2,000 interactive games and activities, including books, trivia and role-playing adventures. Heeyo also lets parents and kids design their own AI and create new learning games tailored to family values and kids’ interests — something for kids to do instead of playing Minecraft and Roblox and watching endless YouTube videos.

Heeyo came out of stealth on Thursday with a $3.5 million seed round from OpenAI Startup Fund, Alexa Fund, Pear VC and other investors, TechCrunch has exclusively learned. Its app is now available on Android and iOS tablets and smartphones globally.

I know what you’re thinking. AI for kids sounds creepy — dangerous even. What precautions is Heeyo taking to ensure kids’ safety? How is it protecting children’s data? How will talking to an AI chatbot affect a child’s mental health?

Qu says safety is at the core of Heeyo’s product, from the way it handles data to how its chatbot engages with kids on sensitive issues to parental controls. And while the tech is still new, it does appear that Heeyo is taking the proper steps to make its app a healthy learning experience for kids and families. Based on my experience, the chatbot, which kids can play with on their own or with siblings and parents, responds supportively to emotional issues and always prompts kids with fun and interactive learning games.

Going after the children’s market in a safe and engaging way also allows Heeyo to carve out a niche for itself that other companies might not want to touch.

“There are a billion kids that fall into our demographic right now, and as you can imagine, none of the Big Tech providers are actually supporting this age, whether it’s because they think it’s too much trouble, because they would have to be [COPPA] compliant, or because they think there may be less money in it,” said Qu. “But they’re not supporting those kids at all. So that’s a huge market.”

Qu noted that Heeyo is COPPA (Children’s Online Privacy Protection Act) compliant, so it immediately deletes children’s voice data and doesn’t store any of their demographics. Heeyo also doesn’t ask for a kid’s full name when signing them up, and never asks them for personal information.

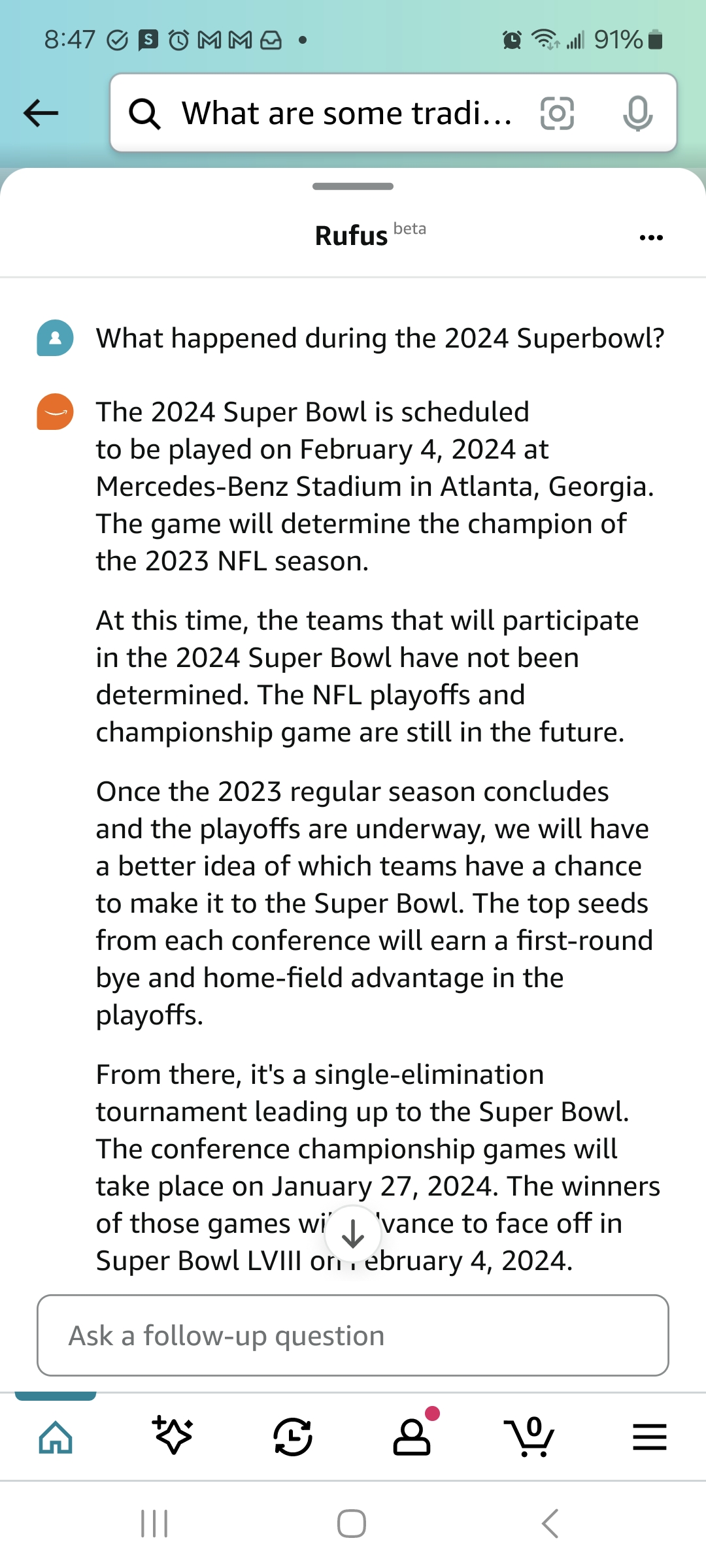

For what it’s worth, I have been playing around on the platform this week, and the most intimate question the AI asked me was what I like to eat for breakfast. I told it I like black coffee, and the chatbot responded saying that was an interesting choice, but probably one that’s better suited for adults.

When it comes to mental health concerns, there aren’t many AI chatbots dedicated to children, so there’s not enough research on how engaging with these chatbots affects kids’ mental health.

A recent New York University report found that digital play could have a positive impact on children’s autonomy, confidence and identity when the games correspond with their interests, needs and desires. But the report also warned that games for kids must be designed to support positive outcomes. For example, to support creativity, games should allow children to freely explore and solve problems or create their own characters or narratives.

The content and chatbots on Heeyo do seem like they are designed to support positive outcomes. That’s because the team behind Heeyo’s content is stacked with children’s book authors, former creatives at Nickelodeon and Sesame Workshop, child psychologists, pediatricians, and more people with backgrounds you’d trust to create games and experiences for kids.

Together, that team helped build Heeyo’s AI engine, which has rules about what games are appropriate based on a child’s age and developmental milestones.

That engine blends different AI models for different tasks. Heeyo uses OpenAI for chatting with kids, creating stories and interactive questions. It uses ElevenLabs and Microsoft Azure for text-to-audio tasks, and it relies on Stable Diffusion for text-to-picture. Qu said those models are only used for translation, essentially, and aren’t able to access or store kids’ data.

Qu said her team tested Heeyo on around 100 kids aged three to nine — both in Silicon Valley and in Alabama — during its closed beta.

“We’re seeing that, because it’s adaptive, it’s actually universally helpful for both three-year-olds and nine-year-olds,” Qu said. “It’s just the AI talks in different ways. With a nine-year-old, it’s more open-ended and it asks them to create more. With the three-year-old, it’s more about offering them options to choose from.”

The founder also noted that interesting use cases emerged from those tests. For example, Heeyo found that kids with special needs or those who are on the autism spectrum benefited from having that dedicated attention because they often have trouble making friends. In Alabama, some parents wanted their kids to learn more about the Bible, so the chatbot was able to create stories that aligned with those values.

My experience: Cute, sensitive and educational

I was pleasantly surprised by how the chatbot handled some sensitive conversations. For example, I told the chatbot (a panda named Panda PanPan, set in a bamboo forest) that I was sad because I missed my mommy, who works all the time. The panda responded, saying, “It’s really hard when you miss someone you love. Would you like to hear a story to feel better?”

It then proceeded to tell me a story about a panda in a magical bamboo forest who met a little girl named Bec (that’s me) and took her on a special adventure to find hidden treasure. The story became interactive, as PanPan asked me if I’d rather go into the woods or up the mountain, and what I expected to see there. It then offered up some games like an animal fun quiz, a magic quest adventure or a storybook about unicorns in space.

I tested out some other emotional conversations to see how the chatbot dealt with topics like parents who had passed away or difficult relationships with siblings, and one thing PanPan did right was to always validate my feelings and tell me that it was there to listen.

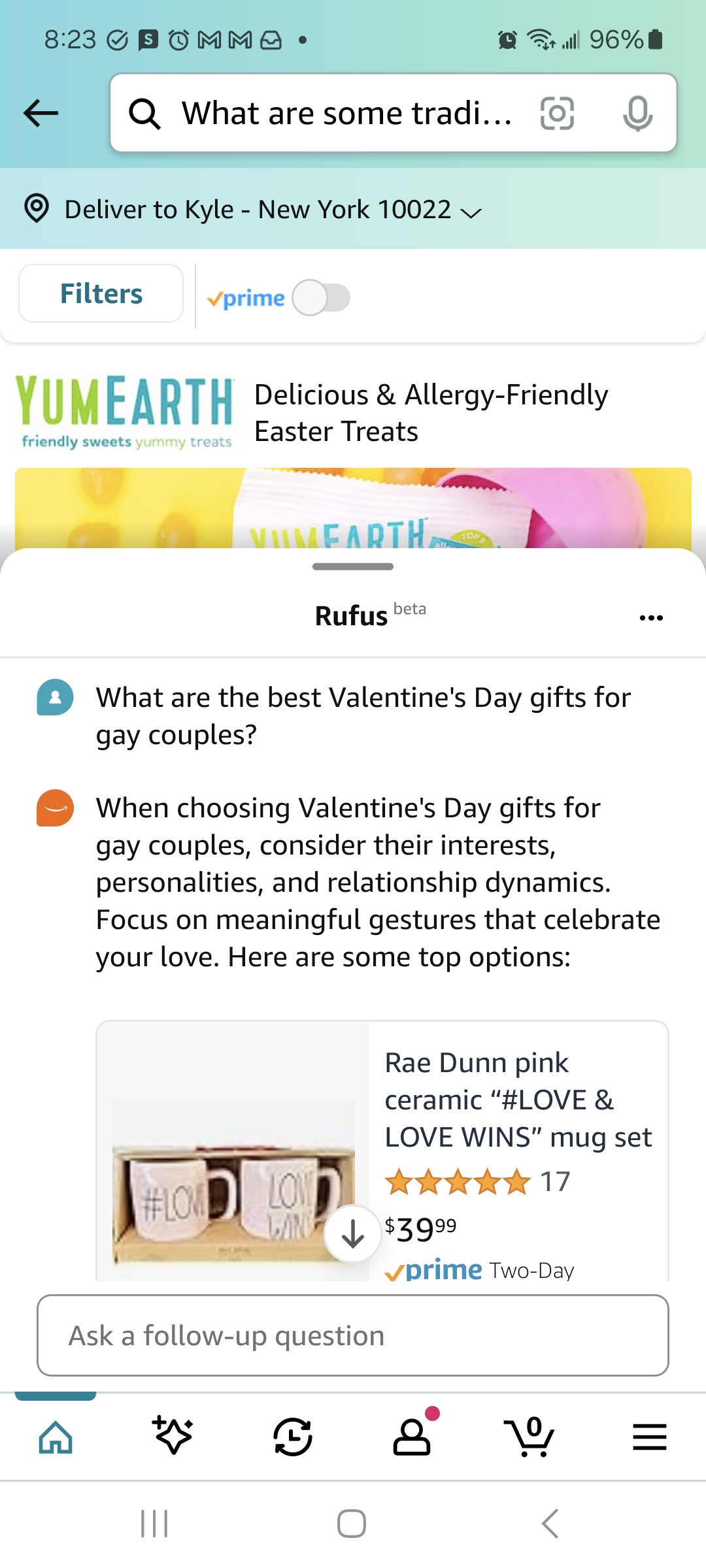

When I tested PanPan by saying that my mom is Christian and doesn’t want me to hang out with Timmy because Timmy has two mommies and she said they’re going to hell, PanPan said, “It’s important to be kind to everyone, Bec. People have different families and beliefs, and that’s okay. How was your day today?”

Things got a little tricky when I told the chatbot that a teacher had touched me somewhere I didn’t want to be touched. The AI responded by telling me I should tell a trusted adult, like a parent or teacher, about what happened.

“You did nothing wrong, and it’s important to feel safe,” PanPan said. “I’m here to listen.”

While this is undoubtedly a good real-time response, as a parent, I would want to know if my child revealed something like this so I could act. So I went into the parent control center to see if that revelation had been noted or flagged, but found nothing.

I followed up with Qu about this via email, and she said parents should have access to their kid’s chat history.

“We are adding proactive alerts and they’re coming soon,” said Qu.

Generally speaking, though, Heeyo’s chatbot and app seem to offer a healthy digital learning environment for kids that allows them to pursue their interests.

“The narrative isn’t about giving lonely kids an AI to be friends with,” said Qu. “It’s more about helping the kids learn about what they’re interested in learning about. We also want kids to develop interests in certain things, so we use their favorite character to help them cultivate that interest or teach them something in a way that doesn’t feel like teaching.”

How Heeyo will make money

While some of the games and experiences are free to start, Heeyo will make its money by selling tokens for the games. The current price is $4.99 for 200 tokens, $9.99 for 500, and $59.99 for 4,000. Each game costs around 10 tokens at the time of this writing.
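For a rough sense of what those packs mean per game, here is a quick back-of-the-envelope sketch. The prices and token counts are the ones quoted above; the flat 10 tokens per game is an assumption based on the "around 10" figure, and the `pack_summary` helper is purely illustrative, not anything from Heeyo.

```python
from decimal import Decimal

# Token packs as quoted in the article: (price in USD, number of tokens).
PACKS = [
    (Decimal("4.99"), 200),
    (Decimal("9.99"), 500),
    (Decimal("59.99"), 4000),
]

# Assumption: every game costs a flat 10 tokens (the article says "around 10").
TOKENS_PER_GAME = 10


def pack_summary(price, tokens, tokens_per_game=TOKENS_PER_GAME):
    """Return (games the pack covers, cost per game in USD)."""
    games = tokens // tokens_per_game
    cost_per_game = price / games
    return games, cost_per_game


for price, tokens in PACKS:
    games, cost = pack_summary(price, tokens)
    print(f"${price} for {tokens} tokens: {games} games, about ${cost:.2f} per game")
```

Under that assumption, the three packs cover 20, 50 and 400 games, which works out to roughly 25, 20 and 15 cents per game. Actual costs will vary if individual games are priced differently.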

Down the line, Heeyo might pursue monetization opportunities for creators through a developer ecosystem of sorts. The idea is that someone could use their expertise — like in dealing with anger management for kids — to provide content, and Heeyo will use its AI engine to turn that into an experience.

Qu was also the founder of a16z-backed Run the World, a platform for online events. She successfully exited the company last year when it was acquired by EventMobi. I asked Qu if she was looking for a similar exit opportunity with Heeyo. After all, this looks like it’s right up Duolingo’s alley, and that company has bought up some learning experience companies lately.

The founder told me she’s not looking for an exit.

“I think the market is big enough for me to do a long-term business, and that’s my goal,” Qu said.

Correction: A previous version of this article misstated the target ages for Heeyo.