17 October, 2024

Since launching in 2017, Retool has made a name for itself as one of the premier low-code tools for building browser-based internal line-of-business applications. The well-funded startup’s service is now used at thousands of companies, including Amazon, OpenAI, Pinterest, Plaid, Snowflake, Taco Bell and Volvo. Now, it’s expanding its focus from internal apps to also include external apps. Aptly named “Retool for External Apps,” this new service is now generally available and aims to make it easy for any business to quickly and efficiently build secure and performant apps for a far wider audience than before.

As Retool CEO and co-founder David Hsu told me, a number of companies had already started using External during its preview phase. They include larger businesses like Orangetheory, which provided a Retool app to more than 1,600 of its studio managers, as well as plenty of startups that are using the service to build MVPs while searching for product/market fit.

For the most part, we’re not talking about consumer applications. While it’s possible to build these — and some people are — Retool’s focus is on business apps, not the next social network.

“The core idea behind Retool is basically that all internal tools have the same building blocks. They all are made up of buttons, forms, tables — stuff like that. Basically, the really cool thing that we’ve learned about applying Retool to external business software is that actually, external business software is also remarkably similar, especially the more operational external software,” Hsu said.

Most of the software written for business users today, whether internal or external, consists of basic CRUD (create, read, update, delete) apps that read from and write to a database. They may differ in how they present data, but the overall functionality doesn’t vary all that much between apps. Yet the vast majority of the world’s developers work on building exactly these kinds of apps from the same building blocks.
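
To make the CRUD pattern concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The `customers` table and the function names are hypothetical illustrations, not anything taken from Retool; the point is simply that create, read, update and delete map onto four small SQL statements, which is why so many business apps end up looking alike underneath.

```python
import sqlite3


def open_db() -> sqlite3.Connection:
    # In-memory database with a hypothetical "customers" table.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, plan TEXT)"
    )
    return conn


def create(conn: sqlite3.Connection, name: str, plan: str) -> int:
    # INSERT a row and return its auto-assigned primary key.
    cur = conn.execute("INSERT INTO customers (name, plan) VALUES (?, ?)", (name, plan))
    conn.commit()
    return cur.lastrowid


def read(conn: sqlite3.Connection, customer_id: int):
    # Returns an (id, name, plan) tuple, or None if no such row exists.
    return conn.execute(
        "SELECT id, name, plan FROM customers WHERE id = ?", (customer_id,)
    ).fetchone()


def update(conn: sqlite3.Connection, customer_id: int, plan: str) -> bool:
    # True if exactly one row was modified.
    cur = conn.execute("UPDATE customers SET plan = ? WHERE id = ?", (plan, customer_id))
    conn.commit()
    return cur.rowcount == 1


def delete(conn: sqlite3.Connection, customer_id: int) -> bool:
    # True if exactly one row was removed.
    cur = conn.execute("DELETE FROM customers WHERE id = ?", (customer_id,))
    conn.commit()
    return cur.rowcount == 1


if __name__ == "__main__":
    db = open_db()
    cid = create(db, "Acme", "free")
    update(db, cid, "pro")
    print(read(db, cid))  # (1, 'Acme', 'pro')
    delete(db, cid)
    print(read(db, cid))  # None
```

A low-code tool essentially generates the forms, tables and buttons that sit on top of queries like these.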

“What’s really remarkable is that, just like for internal tools, the way that people build these CRUD apps today is so primitive,” Hsu said. “You basically use React and you build it from scratch. It’s kind of shocking that people are doing this day in and day out. … We’ve realized that a lot of the learnings that we have for internal software also apply to external software.”

Building for an external audience is a bit different, though, in that branding, performance and the overall look and feel matter quite a bit more. Then again, users of internal apps now also expect those apps to work just like consumer apps, even if there’s still more leeway there to prioritize function over form.

What you definitely can’t ignore when building external apps is security. For this, Retool added the necessary building blocks for authentication and authorization. Hsu also noted that for external apps, developers tend to pull data from APIs more than directly from databases, perhaps in part because APIs give them more control over how data is accessed.
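
The authorization half of that pairing can be illustrated with a small role-based access check. This is a hypothetical sketch, not Retool’s permission model: the roles, the action names and the `Session` type are invented for illustration, and authentication (verifying who the user is) is assumed to have already happened upstream.

```python
from dataclasses import dataclass

# Hypothetical role -> allowed actions map for an external customer portal.
ROLE_PERMISSIONS = {
    "viewer": frozenset({"orders:read"}),
    "manager": frozenset({"orders:read", "orders:update"}),
    "admin": frozenset({"orders:read", "orders:update", "users:invite"}),
}


@dataclass(frozen=True)
class Session:
    user_id: str
    tenant_id: str  # the customer organization this user belongs to
    role: str


def is_authorized(session: Session, action: str, resource_tenant_id: str) -> bool:
    # Deny by default: an unknown role gets an empty permission set.
    allowed = ROLE_PERMISSIONS.get(session.role, frozenset())
    # Tenant isolation: users may only act on records owned by their own organization.
    return action in allowed and session.tenant_id == resource_tenant_id


if __name__ == "__main__":
    s = Session(user_id="u1", tenant_id="acme", role="manager")
    print(is_authorized(s, "orders:update", "acme"))  # True
    print(is_authorized(s, "orders:update", "globex"))  # False
```

Deny-by-default and per-tenant checks are common choices when one app serves many outside customers; a real deployment would typically load roles from an identity provider rather than hard-code them.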

It’s worth noting that Retool is also enabling businesses to embed new Retool apps into existing apps using its existing React and newly launched JavaScript SDKs. Retool also added features to enable invite and onboarding flows, including the ability to send custom emails from the user’s email provider of choice.

“Shipping good software to external users means builders have to think about user-facing features that often aren’t as mission-critical when the tool is only used internally. This includes customizing how users onboard and navigate through applications. Security considerations become paramount with login, password reset flows and granular permissions,” said Antony Bello, a senior product manager at Retool. “Retool for External Apps puts design flexibility and customization at the forefront so that customers can easily build white-labeled apps for external users without sacrificing security or user experience.”
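
Password reset flows are a good example of the extra machinery Bello describes. One common approach, sketched here under our own assumptions and not as Retool’s implementation, is a short-lived token signed with HMAC-SHA256 from Python’s standard library, so the server can verify it without storing it. The secret key, the 15-minute lifetime and the token layout are all illustrative choices.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical secret; a real service would load a random key from secure config.
SECRET_KEY = b"replace-with-a-random-server-side-secret"
TOKEN_TTL_SECONDS = 15 * 60  # illustrative 15-minute lifetime


def _sign(payload: str) -> str:
    # HMAC-SHA256 over the payload, base64url-encoded without padding.
    digest = hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest).decode().rstrip("=")


def make_reset_token(user_id: str, now: Optional[float] = None) -> str:
    # Token layout: "<user_id>.<expiry as Unix time>.<signature>"
    issued = time.time() if now is None else now
    payload = f"{user_id}.{int(issued + TOKEN_TTL_SECONDS)}"
    return f"{payload}.{_sign(payload)}"


def verify_reset_token(token: str, now: Optional[float] = None) -> Optional[str]:
    # Returns the user id if the signature matches and the token has not expired.
    try:
        user_id, expires_str, signature = token.rsplit(".", 2)
        expires = int(expires_str)
    except ValueError:
        return None  # malformed token
    expected = _sign(f"{user_id}.{expires_str}")
    # compare_digest avoids leaking how many characters matched through timing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    current = time.time() if now is None else now
    return user_id if current <= expires else None
```

A production flow would also make tokens single-use, for instance by tying them to a stored nonce; that part is omitted from this sketch.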

In its early days, Retool’s mission was to “change the way software is built.” As Hsu noted, that left people wondering: “So what? Is it for the better? Is it for the worse?” Earlier this year, the company changed its mission to focus on bringing “good software to everyone.” With that, it also published its definition of what constitutes good software (performant, reliable, secure, etc.) and indeed, Hsu says that Retool wants its framework to become more performant than React. He believes that’s quite possible because Retool can focus on a smaller set of use cases.

In 2021, Oleg Klimov, Vlad Guber and Oleg Kiyashko set out to co-create a platform, Refact.ai, that could convince more companies to embrace GenAI for coding by affording users more customization — and control — over the experience.

Klimov and Kiyashko had worked together for nearly a decade building AI-based image recognition and security systems. Guber knew Kiyashko from childhood; they were neighbors in the South Ukrainian town of Yuzhnoukrainsk.

“It was clear that AI would change the very notion of what engineering is,” Klimov told TechCrunch in an email interview. “As software engineers at heart, we decided we need to be in the best position to live through it — creating an independent system for software engineering.”

Most devs acknowledge the AI-driven seismic shifts occurring in their profession. Eighty-two percent of respondents to a recent HackerRank poll said they believe AI will “redefine” the future of coding and software development.

The majority are embracing the change, with 63% of devs in VC firm HeavyBit’s 2023 survey saying that they’re now using GenAI in coding tasks. But employers are more skeptical. In a separate survey of enterprise C-suite and IT professionals, 85% expressed concerns about GenAI’s privacy and security risks.

Some companies, including Apple, Samsung, Goldman Sachs, Walmart and Verizon, have gone so far as to limit internal use of GenAI tools over fears of data compromise.

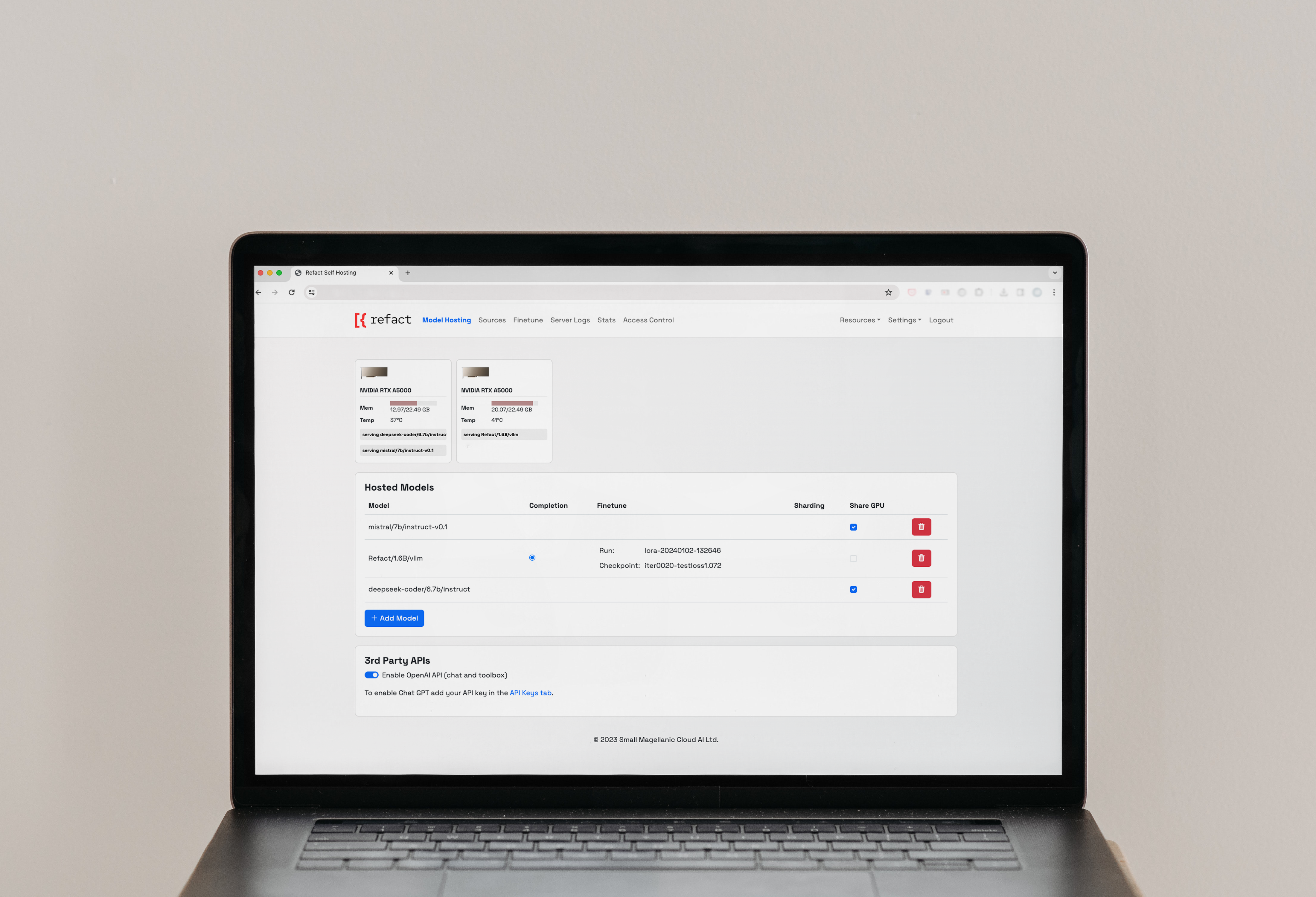

So how’s Refact different? It runs on-premises, Klimov says.

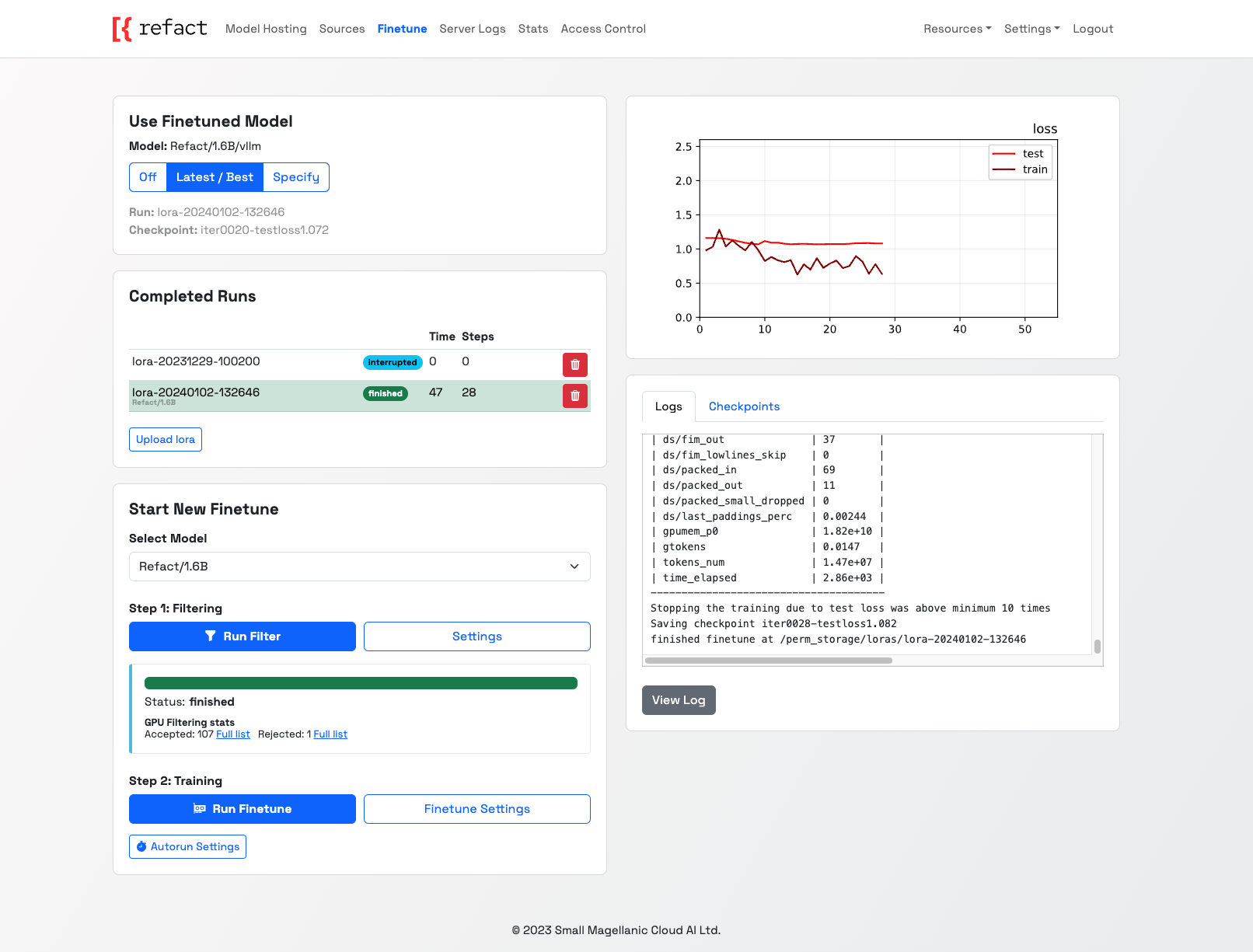

Like GitHub Copilot, Amazon CodeWhisperer and other major GenAI coding assistants, Refact can answer natural language questions about code (e.g. “When was this dependency last updated?”), recommend lines of code and fine-tune to improve its performance with a given codebase.

“One way to think about it is as a ‘strong junior engineer,’” Klimov said, “or an artificial co-worker on a team that’s productive but needs supervision.”

However, unlike many — if not most — of its competitors, Refact doesn’t need an internet connection. It doesn’t even upload basic telemetry data, Klimov claims.

“We’re developing better controls and processes around sources and uses of data, security and privacy as we’re aware of the challenges that [enterprises] face and want to ensure the integrity of our customers’ information and innovative breakthroughs,” Klimov said.

Powering Refact’s platform are compact, code-generating models trained on permissively licensed code — another key competitive advantage, Klimov claims. Some code-generating tools trained using copyrighted or otherwise restrictively licensed code have been shown to regurgitate that code when prompted in a certain way, posing a potential liability risk for the companies deploying them (at least according to some IP experts).

Vendors like GitHub and Amazon have introduced settings and policies aimed at allaying the fears of companies wary of the IP challenges around their GenAI coding tools. But it’s unclear that they’ve made much headway. In a 2023 survey of Fortune 500 businesses by Acrolinx, nearly a third said that intellectual property was their biggest concern about the use of generative AI.

“We used permissive licence code to train [our models] because our customers demanded it,” Klimov said.

Refact’s privacy- and IP-conscious approach helped it raise $2 million in funding from undisclosed investors — and net ~20 pilot projects with enterprise customers. Klimov claims that the platform, which is also available in a cloud-hosted plan that starts at $10 per seat per month, is revenue-generating and currently on track to earn “a few million” annually by this summer.

That’s impressive, considering vendors like GitHub have struggled to make a profit on their code-generating tools. Copilot was reportedly costing GitHub parent Microsoft up to $80 per user per month as a result of the associated cloud processing overhead.

The focus for the London-based, eight-person Refact team in the near future is upgrading Refact to run code autonomously, execute “multi-step” plans and self-test code.

“We’re actively working on a next-generation AI assistant – one that’ll debug the code it writes and operate on any large codebase,” Klimov said. “We’re well-funded internally and have the necessary capital to continue building the product … We’ve never benefited from an abundance of funding or from the venture capital frenzy that took place in previous years, but what’s really benefited us is the availability and eagerness of very talented people who are looking to join the AI revolution — and who saw in Refact as a place to thrive and develop something that can have a lasting impact.”

Developers are adopting AI-powered code generators — services like GitHub Copilot and Amazon CodeWhisperer, along with open access models such as Meta’s Code Llama — at an astonishing rate. But the tools are far from ideal. Many aren’t free. Others are, but only under licenses that preclude them from being used in common commercial contexts.

Perceiving the demand for alternatives, AI startup Hugging Face several years ago teamed up with ServiceNow, the workflow automation platform, to create StarCoder, an open source code generator with a less restrictive license than some of the others out there. The original came online early last year, and work has been underway on a follow-up, StarCoder 2, ever since.

StarCoder 2 isn’t a single code-generating model, but rather a family. Released today, it comes in three variants, the first two of which can run on most modern consumer GPUs:

- A 3-billion-parameter (3B) model trained by ServiceNow
- A 7-billion-parameter (7B) model trained by Hugging Face
- A 15-billion-parameter (15B) model trained by Nvidia, the newest supporter of the StarCoder project

(Note that “parameters” are the parts of a model learned from training data and essentially define the skill of the model on a problem, in this case generating code.)
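
Parameter count also helps explain why the smaller variants are the ones pitched for consumer hardware. As a rough back-of-the-envelope sketch (our own assumption, not a figure from Hugging Face, ServiceNow or Nvidia), storing weights in 16-bit precision takes 2 bytes per parameter; the estimate uses nominal parameter counts and ignores activations, the KV cache and quantization, all of which change the real number.

```python
# Rough weight-only memory estimate using nominal (rounded) parameter counts.
BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    # Decimal gigabytes (1 GB = 1e9 bytes) to keep the arithmetic easy to follow.
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in [("3B", 3e9), ("7B", 7e9), ("15B", 15e9)]:
        print(f"StarCoder 2 {name}: ~{weight_memory_gb(params):.0f} GB in fp16")
    # StarCoder 2 3B: ~6 GB in fp16
    # StarCoder 2 7B: ~14 GB in fp16
    # StarCoder 2 15B: ~30 GB in fp16
```

At roughly 6 GB and 14 GB, the two smaller models are within reach of consumer cards (more comfortably with 8-bit or 4-bit quantization), while the 15B model’s weights alone need around 30 GB.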

Like most other code generators, StarCoder 2 can suggest ways to complete unfinished lines of code as well as summarize and retrieve snippets of code when asked in natural language. Trained on 4x more data than the original StarCoder, drawn from a 67.5-terabyte source-code corpus versus the original’s 6.4 terabytes, StarCoder 2 delivers what Hugging Face, ServiceNow and Nvidia characterize as “significantly” improved performance at lower costs to operate.

StarCoder 2 can be fine-tuned “in a few hours” on first- or third-party data using a GPU like the Nvidia A100 to create apps such as chatbots and personal coding assistants. And because it was trained on a larger and more diverse data set than the original StarCoder, spanning ~619 programming languages, StarCoder 2 can make more accurate, context-aware predictions, at least hypothetically.

“StarCoder 2 was created especially for developers who need to build applications quickly,” Harm de Vries, head of ServiceNow’s StarCoder 2 development team, told TechCrunch in an interview. “With StarCoder2, developers can use its capabilities to make coding more efficient without sacrificing speed or quality.”

Now, I’d venture to say that not every developer would agree with de Vries on the speed and quality points. Code generators promise to streamline certain coding tasks — but at a cost.

A recent Stanford study found that engineers who use code-generating systems are more likely to introduce security vulnerabilities in the apps they develop. Elsewhere, a poll from Sonatype, the cybersecurity firm, shows that the majority of developers are concerned about the lack of insight into how code from code generators is produced and “code sprawl” from generators producing too much code to manage.

StarCoder 2’s license might also prove to be a roadblock for some.

StarCoder 2 is licensed under the BigCode Open RAIL-M 1.0, which aims to promote responsible use by imposing “light touch” restrictions on both model licensees and downstream users. While less constraining than many other licenses, RAIL-M isn’t truly “open” in the sense that it doesn’t permit developers to use StarCoder 2 for every conceivable application (medical advice-giving apps are strictly off limits, for example). Some commentators say RAIL-M’s requirements may be too vague to comply with in any case — and that RAIL-M could conflict with AI-related regulations like the EU AI Act.

In response to the above criticism, a Hugging Face spokesperson had this to say via an emailed statement: “The license was carefully engineered to maximize compliance with current laws and regulations.”

Setting all this aside for a moment, is StarCoder 2 really superior to the other code generators out there — free or paid?

Depending on the benchmark, it appears to be more efficient than Code Llama 33B, one of the versions of Code Llama. Hugging Face says that StarCoder 2 15B matches Code Llama 33B on a subset of code completion tasks at twice the speed. It’s not clear which tasks; Hugging Face didn’t specify.

StarCoder 2, as an open source collection of models, also has the advantage of being able to deploy locally and “learn” a developer’s source code or codebase — an attractive prospect to devs and companies wary of exposing code to a cloud-hosted AI. In a 2023 survey from Portal26 and CensusWide, 85% of businesses said that they were wary of adopting GenAI like code generators due to the privacy and security risks — like employees sharing sensitive information or vendors training on proprietary data.

Hugging Face, ServiceNow and Nvidia also make the case that StarCoder 2 is more ethical — and less legally fraught — than its rivals.

All GenAI models can regurgitate, meaning they can spit out a mirror copy of data they were trained on. It doesn’t take an active imagination to see why this might land a developer in trouble. With code generators trained on copyrighted code, it’s entirely possible that, even with filters and additional safeguards in place, the generators could unwittingly recommend copyrighted code and fail to label it as such.
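
One simple way such a filter might work, shown here as a hypothetical sketch rather than any vendor’s actual safeguard, is to compare token n-grams in a generated snippet against an index built from code that should not be reproduced, and flag suggestions with heavy verbatim overlap. The crude tokenizer, the n-gram size of 8 and the 0.5 threshold are arbitrary illustrative choices.

```python
import re


def tokens(code: str) -> list:
    # Crude tokenizer: runs of word characters, or single non-space symbols.
    return re.findall(r"\w+|[^\w\s]", code)


def ngrams(toks: list, n: int) -> set:
    # All contiguous n-token windows; empty if the snippet is shorter than n.
    return {tuple(toks[i : i + n]) for i in range(len(toks) - n + 1)}


def build_index(corpus: list, n: int = 8) -> set:
    # Union of every n-gram in the reference code.
    index = set()
    for doc in corpus:
        index |= ngrams(tokens(doc), n)
    return index


def overlap_ratio(generated: str, index: set, n: int = 8) -> float:
    # Fraction of the suggestion's n-grams that appear verbatim in the index.
    grams = ngrams(tokens(generated), n)
    if not grams:
        return 0.0
    return len(grams & index) / len(grams)


def looks_regurgitated(generated: str, index: set, n: int = 8, threshold: float = 0.5) -> bool:
    return overlap_ratio(generated, index, n) >= threshold
```

Exact n-gram matching is easy to evade with renamed variables or reformatting, which is one reason such filters reduce, rather than eliminate, the risk described above.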

A few vendors, including GitHub, Microsoft (GitHub’s parent company) and Amazon, have pledged to provide legal coverage in situations where a code generator customer is accused of violating copyright. But coverage varies vendor-to-vendor and is generally limited to corporate clientele.

As opposed to code generators trained using copyrighted code (GitHub Copilot, among others), StarCoder 2 was trained only on data under license from Software Heritage, the nonprofit organization providing archival services for code. Ahead of StarCoder 2’s training, BigCode, the cross-organizational team behind much of StarCoder 2’s roadmap, gave code owners a chance to opt out of the training set if they wanted.
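
Honoring opt-outs amounts to filtering the training set before any training happens. The sketch below is hypothetical, since BigCode’s actual tooling and data format aren’t described here: it assumes each file record carries an `owner/name` repository identifier and drops every record whose repository, or whose owner account, appears on an opt-out list.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class FileRecord:
    repo: str  # hypothetical "owner/name" repository identifier
    path: str
    content: str


def apply_opt_outs(
    records: Iterable[FileRecord],
    opted_out_repos: Iterable[str],
    opted_out_owners: Iterable[str],
) -> list:
    """Drop every file whose repository, or whose repository owner, opted out."""
    # Compare case-insensitively (an assumption about how names are matched).
    repos = {r.lower() for r in opted_out_repos}
    owners = {o.lower() for o in opted_out_owners}
    kept = []
    for rec in records:
        repo = rec.repo.lower()
        owner = repo.split("/", 1)[0]
        if repo in repos or owner in owners:
            continue  # excluded before training ever sees it
        kept.append(rec)
    return kept
```

Publishing the filtered data set afterward, as described next, is what lets outsiders check that opted-out code really was removed.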

As with the original StarCoder, StarCoder 2’s training data is available for developers to fork, reproduce or audit as they please.

Leandro von Werra, a Hugging Face machine learning engineer and co-lead of BigCode, pointed out that while there’s been a proliferation of open code generators recently, few have been accompanied by information about the data that went into training them and, indeed, how they were trained.

“From a scientific standpoint, an issue is that training is not reproducible, but also as a data producer (i.e. someone uploading their code to GitHub), you don’t know if and how your data was used,” von Werra said in an interview. “StarCoder 2 addresses this issue by being fully transparent across the whole training pipeline from scraping pretraining data to the training itself.”

StarCoder 2 isn’t perfect, that said. Like other code generators, it’s susceptible to bias. De Vries notes that it can generate code with elements that reflect stereotypes about gender and race. And because StarCoder 2 was trained predominantly on English-language comments and Python and Java code, it performs worse on languages other than English and on “lower-resource” code like Fortran and Haskell.

Still, von Werra asserts it’s a step in the right direction.

“We strongly believe that building trust and accountability with AI models requires transparency and auditability of the full model pipeline including training data and training recipe,” he said. “StarCoder 2 [showcases] how fully open models can deliver competitive performance.”

You might be wondering — as was this writer — what incentive Hugging Face, ServiceNow and Nvidia have to invest in a project like StarCoder 2. They’re businesses, after all — and training models isn’t cheap.

So far as I can tell, it’s a tried-and-true strategy: foster goodwill and build paid services on top of the open source releases.

ServiceNow has already used StarCoder to create Now LLM, a product for code generation fine-tuned for ServiceNow workflow patterns, use cases and processes. Hugging Face, which offers model implementation consulting plans, is providing hosted versions of the StarCoder 2 models on its platform. So is Nvidia, which is making StarCoder 2 available through an API and web front-end.

For devs expressly interested in the no-cost offline experience, StarCoder 2 — the models, source code and more — can be downloaded from the project’s GitHub page.

AI might be the “it thing” of the moment. But that doesn’t mean it’s getting easier to deploy.

According to a 2023 S&P Global survey, about half of companies with at least one AI project in production are still at the pilot or proof-of-concept stages. The reasons for the slow ramp-ups vary, but the commonly cited ones are challenges around data management, security and compute resources.

Of businesses responding to the S&P poll, around half said they aren’t ready to implement AI — and won’t be for five years or more.

Fortunately, there’s an increasing number of products from startups and Big Tech vendors alike that aim to tackle these AI deployment roadblocks. (See ML Hub, Kore.ai and Viso, to name a few.) One of the newer entrants is Pienso, a platform that lets users build and deploy models without having to write code.

Birago Jones and Karthik Dinakar, both MIT alumni, founded Pienso in 2016 based on their research there. The two first met as graduate students at MIT’s Media Lab.

“We teamed up for a class project to build a tool that would help social media platforms moderate and flag bullying content,” Jones, who serves as Pienso’s CEO, told TechCrunch in an interview. “There was just one problem: While the model itself worked the way it was supposed to, it wasn’t trained on the right data, so it wasn’t able to identify harmful content that used teenage slang.”

Jones and Dinakar eventually realized that the solution was to have subject-matter experts — in this case, teenagers — help train the model. They built tools for this purpose, and some years later, Jones and Dinakar teamed up to commercialize these tools.

What resulted was Pienso, which Jones describes as an AI suite built for “non-technical talent” — namely researchers, marketers and customer support teams who have access to large amounts of data for AI training, but lack the necessary resources to structure and analyze it.

“So much of the AI conversation has been dominated by … large language models,” Jones said, “but the reality is that no one model can do everything. To be able to scale to AI’s full potential, where it can manage business processes and interact with customers, you have to be able to train and fine-tune your model. Pienso believes that any domain expert, not just an AI engineer, should be able to do just that.”

Pienso guides users through the process of annotating or labeling training data for pre-tuned open source or custom AI models. (It depends on the model, but AI generally needs labels — like an image of a bird paired with the label “finch” — to learn to perform a task.) The platform, which can be deployed in the cloud or on-premises, integrates with enterprise systems through APIs. But it can also operate without APIs or third-party services, keeping data within a secure environment.
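
The role labels play can be shown with a toy example: a handful of hand-labeled customer-service snippets and a multinomial naive Bayes classifier written in plain Python. Everything here (the labels, the example texts and the choice of naive Bayes) is an illustrative assumption; Pienso’s platform works with pre-tuned open source or custom models, which this sketch does not reproduce.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labeled examples, as a domain expert might annotate them.
LABELED = [
    ("my bill is wrong and I was charged twice", "billing"),
    ("refund the extra charge on my invoice", "billing"),
    ("why is my monthly payment higher", "billing"),
    ("the box keeps losing signal", "technical"),
    ("no picture on the tv since the update", "technical"),
    ("the router will not connect to wifi", "technical"),
]


def words(text: str) -> list:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, examples):
        self.label_counts = Counter(label for _, label in examples)
        self.word_counts = defaultdict(Counter)
        for text, label in examples:
            self.word_counts[label].update(words(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total = len(examples)
        return self

    def predict(self, text: str) -> str:
        best_label, best_score = None, -math.inf
        for label, n_docs in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # log P(label) + sum over known words of log P(word | label)
            score = math.log(n_docs / self.total)
            for w in words(text):
                if w in self.vocab:  # skip words never seen in training
                    score += math.log((counts[w] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    model = NaiveBayes().fit(LABELED)
    print(model.predict("I was charged twice this month"))  # billing
    print(model.predict("the tv has no signal"))  # technical
```

The classifier is only as good as its labels, which is the point Jones and Dinakar ran into with teenage slang: the people who understand the data are the ones best placed to label it.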

Sky, the U.K. broadcaster, is using Pienso to analyze customer service calls, Jones says, while an unnamed U.S. government agency has tested it for tracking illegal weapons.

“Pienso’s flexible, no-code interface allows teams to train models directly using their own company’s data,” Jones said. “This alleviates the privacy concerns of using … models, and also is more accurate, capturing the nuances of each individual company.”

Companies pay Pienso a yearly license based on the number of AI models they deploy. The greater the number of models, the higher the licensing cost.

“We intentionally designed our pricing to allow customers to test out models beforehand to understand how AI can help them without first having to make a sizable investment,” Jones added. “We wanted to give customers the freedom to experiment with building new models before deploying them.”

It’s a business model that appeals to investors, apparently. Pienso recently raised $10 million in a Series A funding round led by Latimer Ventures with participation from Gideon Capital, SRI, Uncork and Good Growth Capital.

The round brings Pienso’s total funding to $17 million. Jones says the new cash will be put toward scaling up Pienso’s sales, marketing and customer success teams, recruiting engineering talent and building new features for the platform.

Said Latimer Ventures’ Luke Cooper in a statement: “We constantly hear about the need to democratize AI, but what makes Pienso stand out is how they’re thinking about the role of a domain expert in this equation. They’re empowering those who understand their data best to get the most insights out of it. It’s fostering a future where we’re building smarter AI models for a specific application, by the people who are most familiar with the problems they are trying to solve.”