YouTube is going to limit teens’ exposure to videos that promote and idealize a certain fitness level or physical appearance, the company announced on Thursday. The safeguard first rolled out in the U.S. last year and is now being introduced to teens globally.

The announcement comes as YouTube has faced criticism over the past few years for potentially harming teens and exposing them to content that could encourage eating disorders.

The content that YouTube will limit includes videos that compare physical features and idealize certain fitness levels, body types and weight. Separately, YouTube will also limit exposure to videos that display “social aggression” in the form of non-contact fights and intimidation.

The Google-owned platform notes that this type of content may not be harmful as a single video, but it could become problematic if it is shown to teens repeatedly. To combat this, YouTube will limit repeated recommendations of videos related to these topics.

Since YouTube’s recommendations are driven by what users tend to watch and engage with, the company needs to introduce these safeguards to protect teens from being repeatedly exposed to the content even if it adheres to YouTube’s guidelines.

“As a teen is developing thoughts about who they are and their own standards for themselves, repeated consumption of content featuring idealized standards that starts to shape an unrealistic internal standard could lead some to form negative beliefs about themselves,” YouTube’s global head of health, Dr. Garth Graham, said.

Thursday’s announcement comes a day after YouTube introduced a new tool that allows parents to link their accounts to their teen’s account in order to access insights about the teen’s activity on the platform. After a parent has linked their account with their teen’s, they will be alerted to their teen’s channel activity, such as the number of uploads and subscriptions they have.

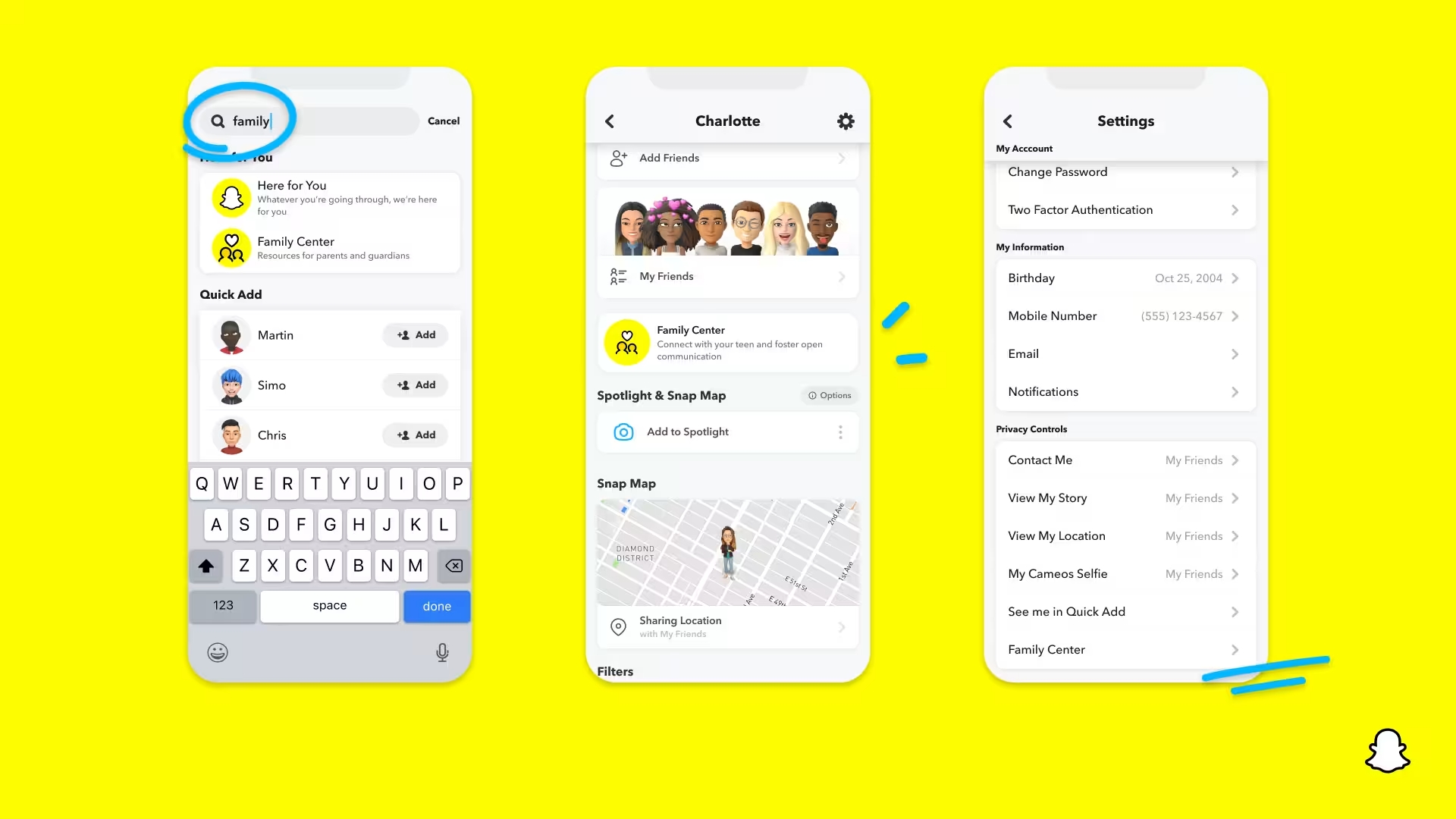

The tool builds on YouTube’s current parental controls that allow parents to set up supervised accounts for children under the age of consent for online services, which is 13 in the U.S. It’s worth noting that other social apps, like TikTok, Snapchat, Instagram and Facebook, also offer supervised accounts linked to young users’ parents.