It’s not every day you come across kombucha playing a starring role in potential industrial disruption. But here at 4YFN in MWC we got chatting to Laura Freixas about her PhD research project that’s using a base of the fermented hipster tea to “upcycle” organic waste into filaments.

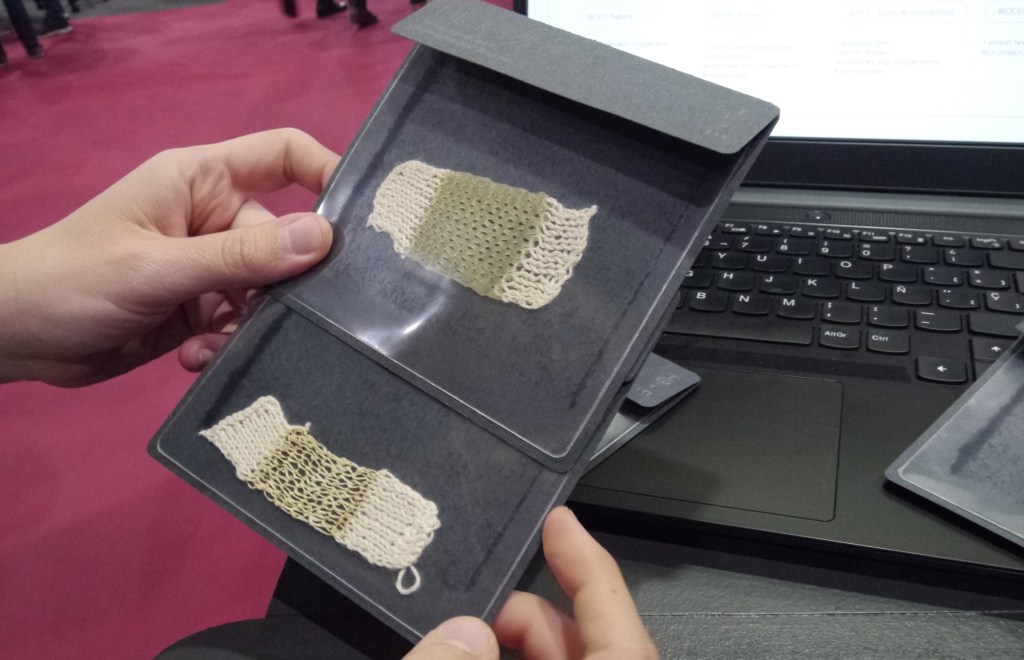

Once processed, these biodegradable threads can be knitted into fabrics. They can also be treated to have different properties, such as elasticity or water resistance. Freixas had a selection of samples of knitted bio-filament on show, offering a glimpse of an eco-friendly alternative to materials like cotton or plastic essentially being brewed into existence.

Freixas is undertaking the project at the Barcelona School of Design and Engineering as part of the Elisava Research team. They’re aiming to commercialize the bio-filament — which they’re calling Biocel. “The aim is to bio-fabricate filaments from organic waste because we have seen several problems in the textile industry,” she said, highlighting the sector’s many challenges.

While multiple startups have been putting effort into developing eco-friendly leathers in recent years, including fungi-based biomaterials from the likes of Bolt Threads, Mycel and MycoWorks, Freixas says less attention has been paid to devising more environmentally friendly filaments for use in fabric production — despite the textile industry’s heavy use of chemicals, energy and water; major problems with pollution and waste; and an ongoing record of human rights violations linked to poor working conditions.

Unlike conventional fabric production, the methods involved in producing Biocel are not labor intensive and do not require lots of energy or harsh chemicals, per Freixas. “This filament is produced with low levels of thermal energy/electricity and no hazardous chemicals,” she told TechCrunch. “Then we obtain a biodegradable filament that we can functionalize, or give properties, to be more elastic, rigid or hydrophobic and make a textile application.”

As with making kombucha, the feedstock for producing the bio-filament needs to have some sugars for the bacteria to work their fermenting magic. That means some agricultural waste, such as grape waste (from wine production) or the cereals left over from brewing beer, will be better suited, owing to relatively high sugar content.

“Between 15% and 50% [of agricultural products] become waste when they are processed. Here we see an opportunity,” she said, pointing to rising regulatory requirements in the European Union aimed at cutting carbon emissions and promoting circularity that are shifting incentives. It could even lead to a situation in which industrial producers pay upcyclers to take their waste off their hands, she suggested.

“Regarding technology, we are building our machine to automatize and monitor the production,” she said. “So we are building a digital platform to control the production. And then we also have a patent pending method for the tension of the filaments.”

Future applications for the bio-filament could include weaving it into accessories such as shoes and bags for the fashion industry; making biodegradable netting for product packaging; or textiles for furniture, according to Freixas.

She said the bio-filament is currently not ideal for use cases where the knitted material would be in direct contact with people’s skin, owing to a relatively rough texture, but suggested more research could help finesse the finish as the team continues to experiment with applying different treatments.

“At this point what we are looking for is for a company that has a need — a real need — so we can develop an application together and put it in the market so we can validate and then scale it,” she added.