17 October, 2024

LAION, the German research org that created the data used to train Stable Diffusion, among other generative AI models, has released a new dataset that it claims has been “thoroughly cleaned of known links to suspected child sexual abuse material (CSAM).”

The new dataset, Re-LAION-5B, is actually a re-release of an old dataset, LAION-5B, but with “fixes” implemented with recommendations from the nonprofit Internet Watch Foundation, Human Rights Watch, the Canadian Centre for Child Protection and the now-defunct Stanford Internet Observatory. It’s available for download in two versions, Re-LAION-5B Research and Re-LAION-5B Research-Safe (which also removes additional NSFW content), both of which were filtered for thousands of links to known (and “likely”) CSAM, LAION says.

“LAION has been committed to removing illegal content from its datasets from the very beginning and has implemented appropriate measures to achieve this from the outset,” LAION wrote in a blog post. “LAION strictly adheres to the principle that illegal content is removed ASAP after it becomes known.”

Important to note is that LAION’s datasets don’t — and never did — contain images. Rather, they’re indexes of links to images and image alt text that LAION curated, all of which came from a different dataset — the Common Crawl — of scraped sites and web pages.

The release of Re-LAION-5B comes after an investigation in December 2023 by the Stanford Internet Observatory that found that LAION-5B — specifically a subset called LAION-5B 400M — included at least 1,679 links to illegal images scraped from social media posts and popular adult websites. According to the report, 400M also contained links to “a wide range of inappropriate content including pornographic imagery, racist slurs, and harmful social stereotypes.”

While the Stanford co-authors of the report noted that it would be difficult to remove the offending content and that the presence of CSAM doesn’t necessarily influence the output of models trained on the dataset, LAION said it would temporarily take LAION-5B offline.

The Stanford report recommended that models trained on LAION-5B “should be deprecated and distribution ceased where feasible.” Perhaps relatedly, AI startup Runway recently took down its Stable Diffusion 1.5 model from the AI hosting platform Hugging Face; we’ve reached out to the company for more information. (Runway in 2023 partnered with Stability AI, the company behind Stable Diffusion, to help train the original Stable Diffusion model.)

Of the new Re-LAION-5B dataset, which contains around 5.5 billion text-image pairs and was released under an Apache 2.0 license, LAION says that the metadata can be used by third parties to clean existing copies of LAION-5B by removing the matching illegal content.
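As a rough illustration of what cleaning an existing copy against the new metadata could look like, here is a minimal Python sketch. It is not LAION's tooling. It assumes each entry is a record with a `url` field and that a match means the same link appears in the cleaned release; the function name, field name and toy rows are all hypothetical, since the article does not describe the actual metadata format.

```python
from typing import Iterable, Iterator, Mapping


def clean_local_copy(
    local_rows: Iterable[Mapping[str, str]],
    retained_urls: set[str],
    url_field: str = "url",  # hypothetical field name, not taken from LAION's schema
) -> Iterator[Mapping[str, str]]:
    """Yield only the rows whose link also appears in the cleaned release."""
    for row in local_rows:
        if row.get(url_field) in retained_urls:
            yield row


# Toy, made-up rows for illustration only; no real dataset entries are shown.
old_copy = [
    {"url": "https://example.com/a.jpg", "text": "a cat"},
    {"url": "https://example.com/b.jpg", "text": "a dog"},
    {"url": "https://example.com/c.jpg", "text": "a bird"},
]
# Links that survive in the (hypothetical) cleaned release.
cleaned_release_urls = {"https://example.com/a.jpg", "https://example.com/c.jpg"}

kept = list(clean_local_copy(old_copy, cleaned_release_urls))
removed_count = len(old_copy) - len(kept)
```

At the scale the article mentions (around 5.5 billion pairs), an in-memory set would not be practical; a real pipeline would more likely do the same comparison as an on-disk join.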

LAION stresses that its datasets are intended for research — not commercial — purposes. But, if history is any indication, that won’t dissuade some organizations. Beyond Stability AI, Google once used LAION datasets to train its image-generating models.

“In all, 2,236 links [to suspected CSAM] were removed after matching with the lists of link and image hashes provided by our partners,” LAION continued in the post. “These links also subsume 1008 links found by the Stanford Internet Observatory report in December 2023 … We strongly urge all research labs and organizations who still make use of old LAION-5B to migrate to Re-LAION-5B datasets as soon as possible.”

The U.S. Federal Trade Commission (FTC), along with two international consumer protection networks, announced on Thursday the results of a study into the use of “dark patterns,” or manipulative design techniques, that can put users’ privacy at risk or push them to buy products or services or take other actions they otherwise wouldn’t have. In an analysis of 642 websites and apps offering subscription services, the study found that the majority (nearly 76%) used at least one dark pattern and nearly 67% used more than one.

Dark patterns refer to a range of design techniques that can subtly encourage users to take some sort of action or put their privacy at risk. They’re particularly popular among subscription websites and apps and have been an area of focus for the FTC in previous years. For instance, the FTC sued dating app giant Match for fraudulent practices, which included making it difficult to cancel a subscription through its use of dark patterns.

The release of the new report could signal that the FTC is planning to pay increased attention to this type of consumer fraud. The report also arrives as the U.S. Department of Justice is suing Apple over its alleged App Store monopoly — a marketplace that generates billions in billings and sales for digital goods and services, including those that come through subscription apps.

The new report published Thursday dives into the many types of dark patterns like sneaking, obstruction, nagging, forced action, social proof and others.

Sneaking was among the most common dark patterns encountered in the study, referring to the inability to turn off the auto-renewal of subscriptions during the sign-up and purchase process. Eighty-one percent of sites and apps studied used this technique to ensure their subscriptions were renewed automatically. In 70% of cases, the subscription providers didn’t provide information on how to cancel a subscription, and 67% failed to provide the date by which a consumer needed to cancel in order to not be charged again.

Obstruction is another common one found in subscription apps; it makes it more difficult or tedious to take a certain action, like canceling a subscription or bypassing the sign-up for the free trial, where the “X” to close the offer is grayed out and somewhat hidden from view.

Nagging involves repeatedly asking the consumer to perform some sort of action that the business wants them to take. (Though not a subscription app, one example of nagging is how TikTok often repeatedly prompts users to upload their contacts to the app, even after the user has said no.)

Forced action means requiring the consumer to take some sort of step to access specific functionality, like filling out their payment details to participate in a free trial — something that 66.4% of the websites and apps in the study had required.

Social proof, meanwhile, uses the power of the crowd to influence a consumer, usually to make a purchase, by displaying metrics related to some sort of activity. This is particularly popular in the e-commerce industry, where a company will display how many others are browsing the same product or adding it to their cart. For subscription apps, social proof may be used to push users to enroll in the subscription by showing how many others are doing the same.

The study found that 21.5% of websites and apps they examined had used notifications and other forms of social proof to push consumers toward enrolling in a subscription.

Sites can also try to instill a sense of urgency to get consumers to buy. This is something seen regularly on Amazon and other e-commerce sites, where people are alerted to low stock, prompting them to check out quickly, but may be less commonly used to sell subscriptions.

Interface interference is a broad category that refers to ways the app or website is designed to push the consumer to make a decision that’s favorable for a business. This could include things like pre-selecting items, like longer or more expensive subscriptions — as 22.5% of those studied did — or using a “false hierarchy” to visually present more favorable options for the business more prominently. The latter was used by 38.3% of businesses in the study.

Interface interference could also involve something the study referred to as “confirmshaming” — meaning using language to evoke an emotion to manipulate the consumer’s decision-making process, like “I don’t want to miss out, subscribe me!”

The study was conducted from January 29 through February 2 as part of the International Consumer Protection and Enforcement Network’s (ICPEN) annual review, and included 642 websites and apps offering subscriptions. The FTC is assuming the presidency role at ICPEN for the 2024-2025 timeframe, it noted. Officials from 27 authorities in 26 countries participated in this study, using dark pattern descriptions set up by the Organization for Economic Cooperation and Development. However, the scope of their work was not to determine if any of the practices were unlawful in the impacted countries; that’s for the individual governments to decide.

The FTC participated in ICPEN’s review, which was also coordinated with the Global Privacy Enforcement Network, a network of more than 80 privacy enforcement authorities.

This isn’t the first time the FTC has examined the use of dark patterns. In 2022, it also authored a report that detailed a range of dark patterns, but that wasn’t limited to only subscription websites and apps. Instead, the older report looked at dark patterns across industries, including e-commerce and children’s apps, as well as different types of dark patterns, like those used in cookie consent banners and more.

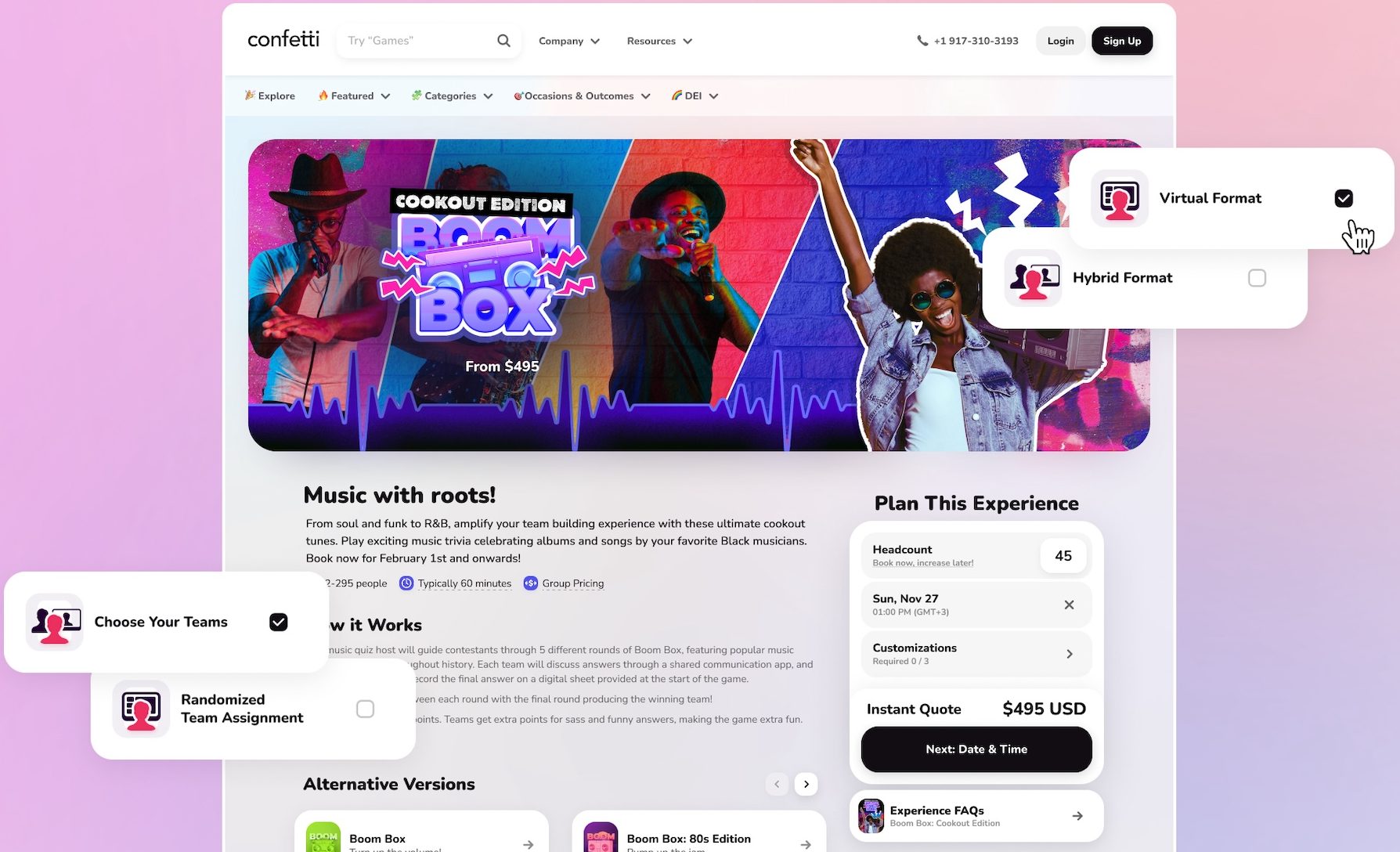

Not many startups can claim Apple, Google, Microsoft, Amazon and Meta as paying customers, but Confetti can. And the list doesn’t stop at a quintet with a collective market value of $10 trillion — the New York-based company says it works with Zoom, Netflix, Stripe, TikTok, Shopify, Adobe, LinkedIn, HubSpot and 30% of the Fortune 500.

That’s some 8,000 companies in total. Not bad going for an events and team-building startup blindsided by a pandemic that pushed much of its target market to hunker down behind closed doors, forcing Confetti to rebuild its business model in a matter of weeks.

Most companies would be happy to have just a couple of the trillion-dollar tech giants on Confetti’s customer list, which is why it’s particularly notable that a fairly under-the-radar startup can lay claim to so many big-name logos.

“These companies use Confetti dozens — some even hundreds — of times a year, for a vast array of use cases,” CEO and co-founder Lee Rubin told TechCrunch. “Such as seasonal occasions like Halloween, Black History Month, holiday parties or goal-driven activities like competition-building, communication or wellness.”

Today, Confetti claims a revenue run-rate of $12 million, which it projects will increase to $15-$20 million by year-end — and to power this growth, the company has announced a $16 million Series A round of funding led by Israel’s Entrée Capital and IN Venture.

Companies use Confetti to bring team-building experiences to their remote teams, with support for hybrid too.

Want to foster camaraderie via virtual baking or cocktail-making? Confetti sorts it all out, including liaising with local suppliers and shipping ingredients direct to employees. Fancy some interactive games such as escape rooms, quizzes, Pictionary or charades? Again, Confetti sorts it all out — all games are designed in-house, from ideation through to creation.

“The platform takes care of the entire event execution process,” Rubin said.

This wasn’t always the case though — Confetti started out more as a marketplace connecting companies with event providers. However, that still bestowed a big responsibility on the organizer to join the dots and ensure a memorable event for all concerned — this is why Confetti segued into more of an end-to-end platform that sorts it all out from start to finish.

“A marketplace only solves the issue of finding ideas and vendors to potentially provide them, but the entire burden of quality assurance, and event execution, still falls on the customer having to communicate directly with the vendor,” Rubin said. “Additionally, most event providers are not corporate focused, they offer the same content to any group for any purpose. We understand that our customers need content that’s tailored to the cultural challenges they face in a work environment.”

Rubin also draws analogies with how Netflix — one of its customers — started out as a content aggregator that transitioned into a content producer to give it greater control of the product it sells.

“[It’s all about] creating the content from scratch, in a consistent way across all our experiences that’s tailored to building a remote or hybrid work culture,” Rubin said.

In a prior job, Rubin says she was tasked with organizing a team-building event, an endeavor that proved more difficult than she initially envisaged.

“At first, I thought it would be easy, but then I realized how many things go into it — coming up with ideas, looking for vendors online, negotiating with them — all with fingers-crossed that my team will have a good time,” Rubin explained. “So I asked myself: Is there an easier way to create meaningful events that are simple to plan?”

And so in 2017, Rubin and her CTO co-founder Eyal Hakim launched Confetti, designed to remove the complexities of organizing team events and experiences. But that was seven years ago — a lifetime away in terms of where the world was at with attitudes toward remote working. The pandemic forced pretty much every company to embrace remote work, meaning that Rubin and Hakim had to switch things up pretty quickly.

“When we started, we were solely focused on in-person events, helping companies organize events in the office,” Rubin said. “When the pandemic hit, we quickly had to pivot, and after two exhausting weeks, our prototype was already up, running and ready to sell. I believe this quick shift is a testament to how startups must be able to stay agile and look at challenges as opportunities to grow beyond the original boundaries.”

Moreover, the pivot seems to have been a net benefit for Confetti, in terms of enabling it to tap a much larger market.

“After switching to virtual events, we realized that this path was way more scalable — you can serve customers around the world without relying on a physical presence,” Rubin said. “So in a way, we really took the most out of the constraints the pandemic imposed on our business.”

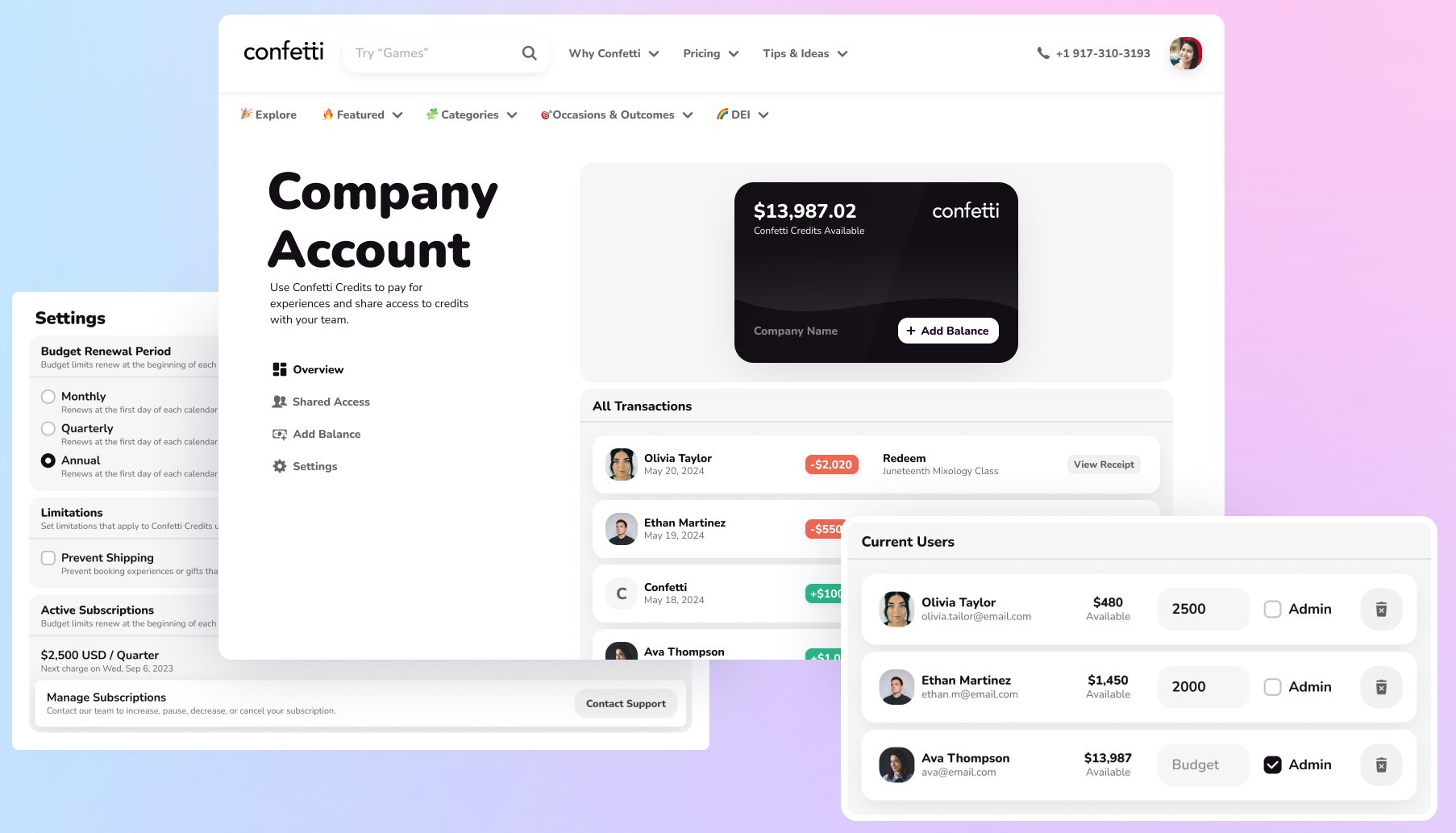

Prior to now, Confetti had raised around $2 million across various seed, angel and venture rounds, with backers including Correlation Ventures, Entrepreneurs Roundtable Accelerator and Delivery Hero co-founder and CEO Niklas Östberg. With another $16 million in the bank, Confetti is well-financed to “shape the future of remote-work culture,” as the company puts it, and this will involve building products around a recurring revenue model with support for multi-team companies.

As Confetti began working with more companies, it came to realize that — with bigger companies in particular — there may be multiple different people from different teams using the Confetti platform. This was a consequence of the product-led growth strategy it had used from the get-go, which is partly why it has turned to dedicated sales teams to ensure a more cohesive “company” experience, replete with different pricing structures and packages to keep them coming back.

“Now that we have thousands of customers, we realized that within each company we often have many different employees who found us separately and are booking from us completely separately,” Rubin said. “To offer our customers even more value, we decided to establish a sales team for the first time who would speak to decision-makers at these companies to offer them Confetti as a company-wide solution for their team-building strategy. With this, we introduced our new ‘company plan,’ which, compared to our pay-as-you-go plan, offers companies many additional benefits and incentives.”

Today, a big part of Confetti’s offering is automation — workflows configured to remove many of the manual steps involved in organizing an event or team-building experience. For instance, once a company has provided all the required details for the booking, hosts are scheduled in (and paid on completion), virtual event rooms are set up, kits are scheduled for shipping and invites sent out, and feedback solicited.

It’s this automation that Rubin believes is one of the elements that sets it apart from other players. However, it’s worth noting that competitors such as Luna Park, Teambuilding.com, Outback Team Building and Marco Experiences also claim some of the very same prestigious customers that Confetti does, meaning that organizations aren’t putting all their team-building eggs in the same basket.

So while there is competition, for sure, Confetti is pushing to position itself as a stickier proposition for businesses, particularly in the enterprise segment. While most of its experiences were historically charged on a per-event basis based on headcount, Confetti is pushing for more sustainable recurring revenue streams.

“We’re already providing companies with options to purchase Confetti Credits, which can be used to buy experiences on our platform,” Rubin said. “Additionally, we offer subscriptions to Confetti Credits (with incentives), enabling planners and companies to plan and execute their year-round virtual team-building strategy seamlessly. In 2024, we’ll increasingly rely on packages and subscriptions, particularly as we scale our SLG [sales-led growth] motion.”

On Thursday, Apple announced that it has opened its iPhone repair process to include used components. Starting this fall, customers and independent repair shops will be able to fix the handset using compatible components.

Components that don’t require configuration (such as volume buttons) were already capable of being harvested from used devices. Today’s news adds all components — including the battery, display and camera — which Apple requires to be configured for full functionality. Face ID will not be available when the feature first rolls out, but it is coming down the road.

At launch, the feature will be available solely for the iPhone 15 line on both the supply and receiving ends of the repair. That caveat is due, in part, to limited interoperability between the models. In many cases, parts from older phones simply won’t fit. The broader limitation that prohibited the use of components from used models comes down to a process commonly known as “parts pairing.”

Apple has defended the process, stating that using genuine components is an important aspect of maintaining user security and privacy. Historically, the company hasn’t used the term “parts pairing” to refer to its configuration process, but it acknowledges that phrase has been widely adopted externally. It’s also aware that the term is loaded in many circles.

“‘Parts pairing’ is used a lot outside and has this negative connotation,” Apple senior vice president of hardware engineering, John Ternus, tells TechCrunch. “I think it’s led people to believe that we somehow block third-party parts from working, which we don’t. The way we look at it is, we need to know what part is in the device, for a few reasons. One, we need to authenticate that it’s a real Apple biometric device and that it hasn’t been spoofed or something like that. … Calibration is the other one.”

Right-to-repair advocates have accused Apple of hiding behind parts pairing as an excuse to stifle user-repairability. In January, iFixit called the process the “biggest threat to repair.” The post paints a scenario wherein an iPhone user attempts to harvest a battery from a friend’s old device, only to be greeted with a pop-up notification stating, “Important Battery Message. Unable to verify this iPhone has a genuine Apple battery.”

It’s a real scenario and surely one that’s proven confusing for more than a few people. After all, a battery that was taken directly from another iPhone is clearly the real deal.

Today’s news is a step toward resolving the issue on newer iPhones, allowing the system to effectively verify that the battery being used is, in fact, genuine.

“Parts pairing, regardless of what you call it, is not evil,” says Ternus. “We’re basically saying, if we know what module’s in there, we can make sure that when you put our module in a new phone, you’re gonna get the best quality you can. Why’s that a bad thing?”

The practice took on added national notoriety when it was specifically targeted by Oregon’s recently passed right-to-repair bill. Apple, which has penned an open letter in support of a similar California bill, heavily criticized the bill’s parts pairing clause.

“Apple supports a consumer’s right to repair, and we’ve been vocal in our support for both state and federal legislation,” a spokesperson for the company noted in March. “We support the latest repair laws in California and New York because they increase consumer access to repair while keeping in place critical consumer protections. However, we’re concerned a small portion of the language in Oregon Senate Bill 1596 could seriously impact the critical and industry-leading privacy, safety and security protections that iPhone users around the world rely on every day.”

While aspects of today’s news will be viewed as a step in the right direction among some repair advocates, it seems unlikely that it will make the iPhone wholly compliant with the Oregon bill. Apple declined to offer further speculation on the matter.

Biometrics — including fingerprint and facial scans — continue to be a sticking point for the company.

“You think about Touch ID and Face ID and the criticality of their security because of how much of our information is on our phones,” says Ternus. “Our entire life is on our phones. We have no way of validating the performance of any third-party biometrics. That’s an area where we don’t enable the use of third-party modules for the key security functions. But in all other aspects, we do.”

It doesn’t seem coincidental that today’s news is being announced within weeks of the Oregon bill’s passage — particularly given that these changes are set to roll out in the fall. The move also appears to echo Apple’s decision to focus more on user-repairability with the iPhone 14, news that arrived amid a rising international call for right-to-repair laws.

Apple notes, however, that the processes behind this work were set in motion some time ago. Today’s announcement around device harvesting, for instance, has been in the works for two years.

For his part, Ternus suggests that his team has been focused on increasing user access to repairs independent of looming state and international legislation. “We want to make things more repairable, so we’re doing that work anyway,” he says. “To some extent, with my team, we block out the news of the world, because we know what we’re doing is right, and we focus on that.”

Overall, the executive preaches a kind of right tool for the right job philosophy to product design and self-repair.

“Repairability in isolation is not always the best answer,” Ternus says. “One of the things that I worry about is that people get very focused as if repairability is the goal. The reality is repairability is a means to an end. The goal is to build products that last, and if you focus too much on [making every part repairable], you end up creating some unintended consequences that are worse for the consumer and worse for the planet.”

Also announced this morning is an enhancement to Activation Lock, which is designed to deter thieves from harvesting stolen phones for parts. “If a device under repair detects that a supported part was obtained from another device with Activation Lock or Lost Mode enabled,” the company notes, “calibration capabilities for that part will be restricted.”

Ternus adds that, in addition to harvesting used iPhones for parts, Apple “fundamentally support[s] the right for people to use third-party parts as well.” Part of that, however, is enabling transparency.

“We have hundreds of millions of iPhones in use that are second- or third-hand devices,” he explains. “They’re a great way for people to get into the iPhone experience at a lower price point. We think it’s important for them to have the transparency of: was a repair done on this device? What part was used? That sort of thing.”

When iOS 15.2 arrived in November 2021, it introduced a new feature called “iPhone parts and service history.” If your phone is new and has never been repaired, you simply won’t see it. If one of those two qualifications does apply to your device, however, the company surfaces a list of switched parts and repairs in Settings.

Ternus cites a recent UL Solutions study as evidence that third-party battery modules, in particular, can present a hazard to users.

“We don’t block the use of third-party batteries,” he says. “But we think it’s important to be able to notify the customer that this is or isn’t an authentic Apple battery, and hopefully that will motivate some of these third parties to improve the quality.”

While the fall update will open harvesting up to a good number of components, Apple has no plans to sell refurbished parts for user repairs.

The FCC has proposed a $6 million fine for the scammer who used voice-cloning tech to impersonate President Biden in a series of illegal robocalls during a New Hampshire primary election. It’s more about robocalls than AI, but the agency is clearly positioning this as a warning to other would-be high-tech scammers.

As you may recall, in January, many voters in New Hampshire received a call purporting to be a message from the president telling them not to vote in the upcoming primary. This was, of course, fake — a voice clone of President Biden using tech that has become widely available over the last couple years.

While creating a fake voice has been possible for a long time, generative AI platforms have made it trivial: Dozens of services offer cloned voices with few restrictions or oversight. You can make your own Biden voice pretty easily with a minute or two of his speeches, which naturally are easily found online.

What you can’t do, the FCC and several law enforcement agencies have made clear, is use that fake Biden to suppress voters, via robocalls that were already illegal.

“We will act swiftly and decisively to ensure that bad actors cannot use U.S. telecommunications networks to facilitate the misuse of generative AI technology to interfere with elections, defraud consumers, or compromise sensitive data,” said chief of the FCC’s Enforcement Bureau, Loyaan Egal, in a press release.

“Political consultant” Steve Kramer was the primary perpetrator, though he enlisted the help of the shady Life Corporation (previously charged with illegal robocalls) and the calling services of shady telecom Lingo, AKA Americatel, AKA BullsEyeComm, AKA Clear Choice Communications, AKA Excel Telecommunications, AKA Impact Telecom, AKA Matrix Business Technologies, AKA Startec Global Communications, AKA Trinsic Communications, AKA VarTec Telecom.

Kramer is “apparently” in violation of several rules — but as yet there are no criminal proceedings against him or his collaborators. This is a limitation of the FCC’s power: They must work with local or federal law enforcement to put weight behind their determinations of liability as an expert agency.

The $6 million fine is more like a ceiling or aspiration; as with the FTC and others, the actual amount paid is often far less for numerous reasons, but even so, it’s a significant sum. The next step is for Kramer to respond to the allegations, though separate actions are being taken against Lingo, or whatever they call themselves now that they’ve been caught again, which may result in fines or lost licenses.

AI-generated voices were officially declared illegal to use in robocalls in February, after the case above prompted the question of whether they counted as “artificial” — and the FCC decided, quite sensibly, that they do.
